Project

An interactive educational immersive space mostly for children

Location

Optometry Center of Peking, China

Managed by

Xiang Gao (高翔)

Equipment specifications

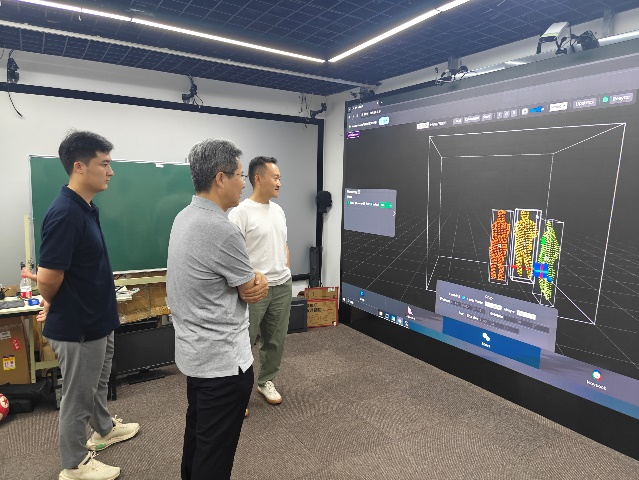

Portable servers + 2x Orbbec Femto Mega

Unreal Engine + nDisplay

Takeaway

- Technical: integration of Orbbec Femto PoE sensors with a portable server, with a low technical barrier for non-technical users

- Interactive design: clever use (or hack) of a “virtual production” or “mixed reality” perspective effect, rendered from the user’s point of view

- Interactive design: automated installation in which participants trigger the experience themselves

Concept

A fun and educational interactive immersive space designed mostly for children.

Technical setup

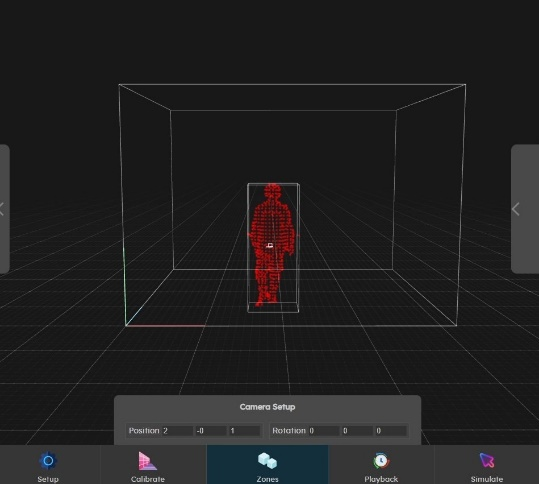

The entire system is based on Augmenta tracking technology. The experience needs to be quick and straightforward, requiring no introduction and no wearables or attachments: children simply walk in, play for a few minutes, and walk out.

Augmenta provides the following key features used in this project:

- System origin point

- Relative position of the tracked person

- Individual tracking IDs among multiple tracking targets

- Expandability

- Unreal Engine support

Two sensor units cover the tracking volume. The first person to enter the tracking area (the prime person) is granted the perspective of the virtual camera in the scene. Thanks to the individual tracking IDs, later arrivals can stay in the space without disturbing the experience. Whenever the prime person leaves, the person holding the next ID automatically takes over the perspective. With Augmenta, the whole experience stays seamless.
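The hand-over rule above can be sketched in a few lines. This is a minimal Python illustration, not the project's Unreal implementation or an Augmenta API: it assumes each tracking frame delivers the set of integer tracking IDs currently in the area, and the class name `PrimeSelector` is hypothetical. It remembers arrival order itself, so the person who has been present longest after the prime person takes over the camera.

```python
from typing import List, Optional, Set


class PrimeSelector:
    """Hypothetical helper: decides which tracked person owns the virtual camera."""

    def __init__(self) -> None:
        self._arrivals: List[int] = []  # tracking IDs in order of first appearance

    def update(self, present_ids: Set[int]) -> Optional[int]:
        """Call once per tracking frame with the IDs currently in the area.

        Returns the ID of the prime person, or None when the area is empty.
        """
        # Forget everyone who has left the tracking area.
        self._arrivals = [i for i in self._arrivals if i in present_ids]
        # Queue newcomers; sorting keeps the order deterministic when several
        # people appear in the same frame.
        for i in sorted(present_ids):
            if i not in self._arrivals:
                self._arrivals.append(i)
        # The longest-present person holds the perspective.
        return self._arrivals[0] if self._arrivals else None
```

For example, with `sel = PrimeSelector()`, the calls `sel.update({3})`, `sel.update({3, 5})` and `sel.update({5, 7})` return 3, 3 and 5: person 5 inherits the perspective as soon as person 3 leaves.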

Content

Unreal Engine generates all the graphics and runs the interactive logic. The main configuration is:

- Augmenta tracking with the Augmenta Unreal plugin

- Unreal Engine with nDisplay

The Augmenta Unreal plugin offers a simple way to feed Augmenta tracking data into the UE Live Link module. Here is the process:

- Complete the tracking configuration in Augmenta

- Set up the output protocol (Unreal)

- Define the destination IP address and ports

- Activate the Augmenta plugin in the Unreal Engine editor

This is a quick and straightforward way to get tracking data into Unreal Engine. The explanation here is only a general overview; the full Augmenta integration will be covered in its own document in the future.
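When the destination IP address and port are set, a quick way to confirm that data actually reaches the Unreal machine is to listen on that port before launching the editor. This is a minimal Python sketch under assumptions: it assumes the tracking output arrives as UDP datagrams (typical for OSC-style tracking streams; check Augmenta's documentation for the actual transport), it does not decode the payload, and the function names are hypothetical. Run it while Unreal is closed, since normally only one process can bind a given UDP port.

```python
import socket
from typing import Optional, Tuple


def open_listener(host: str = "0.0.0.0", port: int = 0) -> socket.socket:
    """Bind a UDP socket on the destination address/port configured in Augmenta.

    "0.0.0.0" listens on all network interfaces; port 0 lets the OS pick one.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    return sock


def wait_for_datagram(
    sock: socket.socket, timeout_s: float = 2.0
) -> Optional[Tuple[bytes, Tuple[str, int]]]:
    """Return (payload, (sender_ip, sender_port)) for the first datagram, or None on timeout."""
    sock.settimeout(timeout_s)
    try:
        return sock.recvfrom(65535)  # 65535 bytes covers any UDP payload
    except socket.timeout:
        return None
```

For example, `wait_for_datagram(open_listener(port=7000), 5.0)` (with 7000 replaced by the port you entered) returning `None` points to a wrong IP address, a wrong port, or a firewall rule rather than a problem inside Unreal.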

Unreal nDisplay is most likely the best option for small-scale installations, because it offers:

- No cost: no media server of any kind is needed for projection mapping

- Native integration in the engine, which keeps development straightforward

- A large amount of learning material and a strong community

- Excellent real-time rendering capability

Interactive design

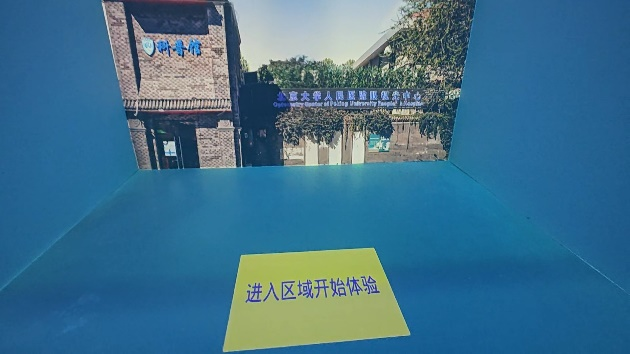

The experience uses the participant’s position to trigger animations, voice clips, stage changes and more.

Simply by stepping onto the yellow area, the participant automatically starts the experience, without any controller or keyboard input.
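Zone triggering of this kind can be sketched as a point-in-rectangle test plus edge detection, so the experience starts once when someone steps in rather than restarting every frame. This is a minimal Python illustration, not the project's code: it assumes the yellow area is an axis-aligned rectangle in the tracker's floor coordinates and that each frame provides a mapping from tracking ID to (x, y) position; `Zone` and `ZoneTrigger` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass(frozen=True)
class Zone:
    """Hypothetical axis-aligned floor rectangle, in the tracker's coordinate units."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


class ZoneTrigger:
    """Reports which tracking IDs entered the zone during the current frame."""

    def __init__(self, zone: Zone) -> None:
        self.zone = zone
        self._inside: Set[int] = set()

    def update(self, positions: Dict[int, Tuple[float, float]]) -> List[int]:
        """positions maps tracking ID -> (x, y) floor position for this frame."""
        now_inside = {pid for pid, (x, y) in positions.items() if self.zone.contains(x, y)}
        entered = sorted(now_inside - self._inside)  # fire only on the entering frame
        self._inside = now_inside
        return entered
```

A non-empty list from `update` is the moment to start the experience; people who stay in the yellow area do not retrigger it until they leave and step back in.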

The immersive approach focuses on the first-person visual perspective. When the virtual camera’s perspective is properly aligned with the participant’s real viewpoint, it creates an illusion of depth. This illusion is the core concept the experience is built on.
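The alignment described above requires an off-axis (asymmetric) frustum computed from the viewer’s eye position relative to the physical screen; engines such as nDisplay typically handle this internally, and this sketch only shows the geometry. It follows the generalized perspective projection described by Robert Kooima, and it is an illustration, not the project’s setup: it assumes a flat rectangular screen, right-handed coordinates in consistent units, and the function names are hypothetical. It returns the left/right/bottom/top extents at the near plane, as used by a glFrustum-style projection matrix.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(a: Vec3) -> Vec3:
    n = math.sqrt(_dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def off_axis_frustum(pa: Vec3, pb: Vec3, pc: Vec3, pe: Vec3,
                     near: float) -> Tuple[float, float, float, float]:
    """Frustum extents (left, right, bottom, top) at the near plane.

    pa, pb, pc: screen lower-left, lower-right and upper-left corners.
    pe: viewer eye position (e.g. the tracked head position).
    """
    vr = _normalize(_sub(pb, pa))    # screen right axis
    vu = _normalize(_sub(pc, pa))    # screen up axis
    vn = _normalize(_cross(vr, vu))  # screen normal, pointing toward the viewer
    va, vb, vc = _sub(pa, pe), _sub(pb, pe), _sub(pc, pe)
    d = -_dot(va, vn)                # distance from the eye to the screen plane
    if d <= 0:
        raise ValueError("eye must be in front of the screen")
    scale = near / d                 # project screen edges onto the near plane
    return (_dot(vr, va) * scale, _dot(vr, vb) * scale,
            _dot(vu, va) * scale, _dot(vu, vc) * scale)
```

For a 2 × 2 wall spanning x from -1 to 1 and y from 0 to 2, an eye centred at (0, 1, 2) gives a symmetric frustum (-0.5, 0.5, -0.5, 0.5) with `near=1.0`; moving the eye to x = 0.5 skews it to (-0.75, 0.25, -0.5, 0.5). That skew is what keeps the virtual scene anchored behind the wall as the participant walks.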

Another example: when the participant walks close to the character, a different animation is triggered.
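A proximity trigger like this is commonly built as a distance check with hysteresis, so the animation does not flicker when someone stands right on the boundary. The following is a minimal Python sketch of that technique, not the project’s implementation: it assumes one tracked floor position per frame (for example the prime person’s), and the class name and radii are hypothetical values to tune on site.

```python
import math
from typing import Tuple


class ProximityTrigger:
    """Hypothetical helper: 'near' below enter_radius, 'far' again only beyond exit_radius."""

    def __init__(self, center: Tuple[float, float],
                 enter_radius: float, exit_radius: float) -> None:
        if exit_radius < enter_radius:
            raise ValueError("exit_radius must be >= enter_radius")
        self.center = center
        self.enter_radius = enter_radius
        self.exit_radius = exit_radius
        self.near = False

    def update(self, position: Tuple[float, float]) -> bool:
        """Feed one (x, y) position; returns True only on the frame the person becomes near."""
        dist = math.dist(position, self.center)
        if not self.near and dist <= self.enter_radius:
            self.near = True
            return True  # play the "close to the character" animation
        if self.near and dist > self.exit_radius:
            self.near = False  # reset only once the person is clearly away
        return False
```

The gap between the two radii is the design choice: standing between them keeps the current state, so small tracking jitter cannot retrigger the animation.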

Augmenta tracking makes many kinds of interaction possible, and they are easy to set up.

Conclusion

In this project, Augmenta facilitated:

- Easy and efficient technical deployment (ready-to-deploy equipment and interface)

- Easy and efficient content creation (simulator + Unreal plugin)

Augmenta position, tracking and bounding-box data were used for:

- Perspective effects, by estimating the head position (top of the bounding box with an offset, combined with tracking)

- Zone triggering from position data (experience start, animations, triggers, interactions)
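The head-position estimate listed above can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the project’s code: it assumes the tracker reports a floor position (x, y) and the height of the bounding-box top in metres with z pointing up, and it adds exponential smoothing to reduce jitter. The class name, the 0.10 m eye offset and the smoothing factor are hypothetical starting values to tune. Unreal works in centimetres, so the result would need scaling by 100 before driving the camera.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class HeadEstimator:
    """Hypothetical helper: eye position from the top of the bounding box, smoothed."""
    eye_offset: float = 0.10  # hypothetical: metres from box top down to eye level
    smoothing: float = 0.3    # hypothetical: in (0, 1], higher reacts faster
    _state: Optional[Vec3] = None

    def __post_init__(self) -> None:
        if not 0.0 < self.smoothing <= 1.0:
            raise ValueError("smoothing must be in (0, 1]")

    def update(self, x: float, y: float, box_top: float) -> Vec3:
        """Feed one frame of tracking data; returns the smoothed eye position."""
        raw = (x, y, box_top - self.eye_offset)
        if self._state is None:
            self._state = raw  # first frame: no history to blend with
        else:
            a = self.smoothing
            # Exponential moving average on each axis.
            self._state = (a * raw[0] + (1 - a) * self._state[0],
                           a * raw[1] + (1 - a) * self._state[1],
                           a * raw[2] + (1 - a) * self._state[2])
        return self._state

    def reset(self) -> None:
        """Call when the prime person changes so the camera does not glide between people."""
        self._state = None
```

The smoothed position is what would feed the off-axis camera; calling `reset()` on a hand-over makes the camera jump cleanly to the new prime person instead of drifting.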